Introduction

My role at Cisco is transitioning to enterprise, so I won’t be working on Nexus switches much anymore. I figured it would be a good time to finish this article on DCNM. In my previous article, I talked about DCNM’s overlay provisioning capabilities and explained the basic structure DCNM uses to describe multi-tenant data centers. In this article, we will look at the details of 802.1q-triggered auto-configuration, as well as VMTracker-triggered auto-configuration. Please be aware that the types of triggers and their behaviors depend on the platform you are using. For example, you cannot do dot1q-based triggers on Nexus 9K, and on Nexus 5K, while you can use VMTracker, it will not prune unneeded VLANs. If you have not read my previous article, please review it so the terminology is clear.

Have a look at the topology we will use:

The spine switches are not particularly relevant, since they are just passing traffic and are not actively involved in the auto-configuration. The Nexus 5K leaves are, of course, and an ESXi server is attached to each of them. The server on the left has two VMs in two different VLANs, 501 and 502. The 5K will learn about the active hosts via 802.1q triggering. The rightmost host has only one VM, and in this case the switch will learn about the host via VMTracker. In both cases the switches will provision the required configuration in response to the workloads, without manual intervention, pulling their configs from DCNM as described in part 1.

Underlay

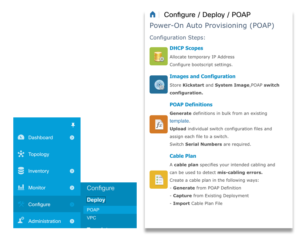

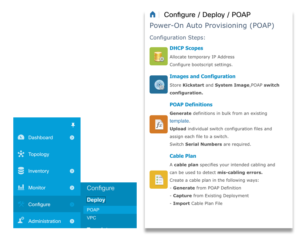

Because we are focused on overlay provisioning, I won’t go through the underlay piece in detail. However, when you set up the underlay, you need to configure some parameters that will be used by the overlay. Since you are using DCNM, I’m assuming you’ll be using the Power-on Auto-Provisioning (PoAP) feature, which allows a switch to get its configuration on bootup without human intervention.

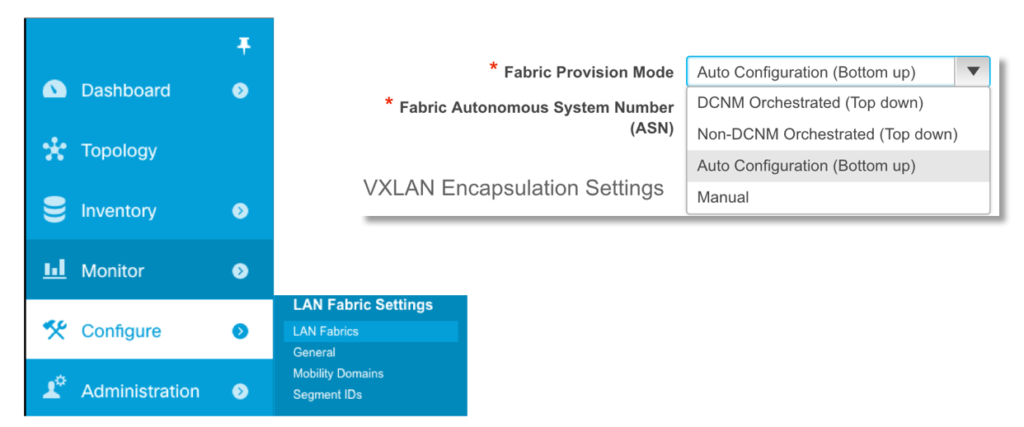

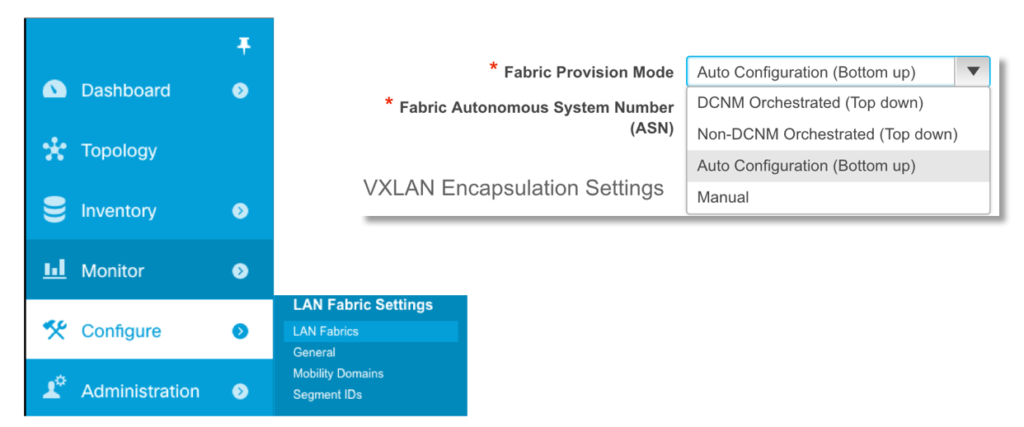

Recall that a fabric is the highest-level construct we have in DCNM. The fabric is a collection of switches running an encapsulation like VXLAN or FabricPath together. Before we create any PoAP definitions, we need to set up a fabric. During the definition of the fabric, we choose the type of provisioning we want. Since we are doing auto-config, we choose the auto-config option as our Fabric Provision Mode. The previous article describes the Top Down option.

Next, we need to build our PoAP definitions. Each switch that is configured via PoAP needs a definition, which tells DCNM what software image and what configuration to push. This is done from the Configure->PoAP->PoAP Definitions section of DCNM. Because generating a lot of PoAP defs for a large fabric is tedious, DCNM 10 also allows you to build a fabric plan, where you specify the overall parameters for your fabric and DCNM then generates the PoAP definitions automatically, incrementing variables such as the management IP address for you. We won’t cover fabric plans here, but if you go that route the auto-config piece is basically the same.

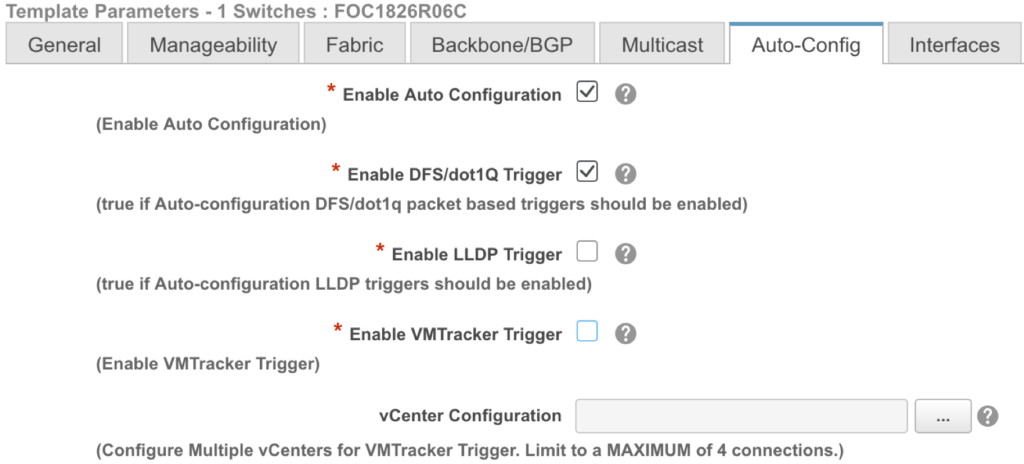

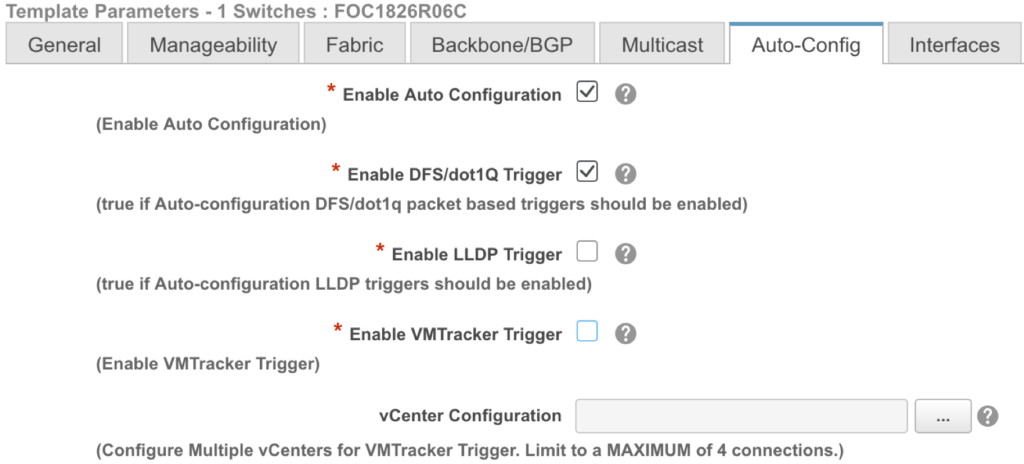

Once we are in the PoAP definition for the individual switch, we can enable auto-configuration and select the type we want.

In this case I have only enabled the 802.1q trigger. If I want to enable VMTracker, I just check the box for it and enter my vCenter server IP address and credentials in the box below. I won’t show the interface configuration, but please note that it is very important that you choose the correct access interfaces in the PoAP defs. As we will see, DCNM will add some commands under the interfaces to make the auto-config work.

Once the switch has been powered on and has pulled down its configuration, you will see the relevant config under the interfaces:

n5672-1# sh run int e1/33

interface Ethernet1/33

switchport mode trunk

encapsulation dynamic dot1q

spanning-tree port type edge trunk

If the encapsulation command is not there, auto-config will not work.
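Since a missing encapsulation command silently disables auto-config, it can be worth checking for it after PoAP completes. The following is a minimal sketch, assuming you have already captured the `sh run int` output as text by whatever means you prefer. The function name `has_dynamic_encapsulation` is hypothetical and not part of any Cisco tool.

```python
def has_dynamic_encapsulation(interface_config: str, trigger: str = "dot1q") -> bool:
    """Return True if the captured interface config contains the trigger command.

    `trigger` is "dot1q" or "vmtracker", matching the two commands shown
    in this article. Blank lines and indentation are ignored.
    """
    wanted = f"encapsulation dynamic {trigger}"
    return any(line.strip() == wanted for line in interface_config.splitlines())


# Sample text copied from the interface output shown above.
sample = """\
interface Ethernet1/33
  switchport mode trunk
  encapsulation dynamic dot1q
  spanning-tree port type edge trunk
"""

print(has_dynamic_encapsulation(sample))                       # dot1q trigger
print(has_dynamic_encapsulation(sample, trigger="vmtracker"))  # vmtracker trigger
```

The check compares whole lines rather than substrings, so a commented-out or partially typed command would not count as present.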

Overlay Definition

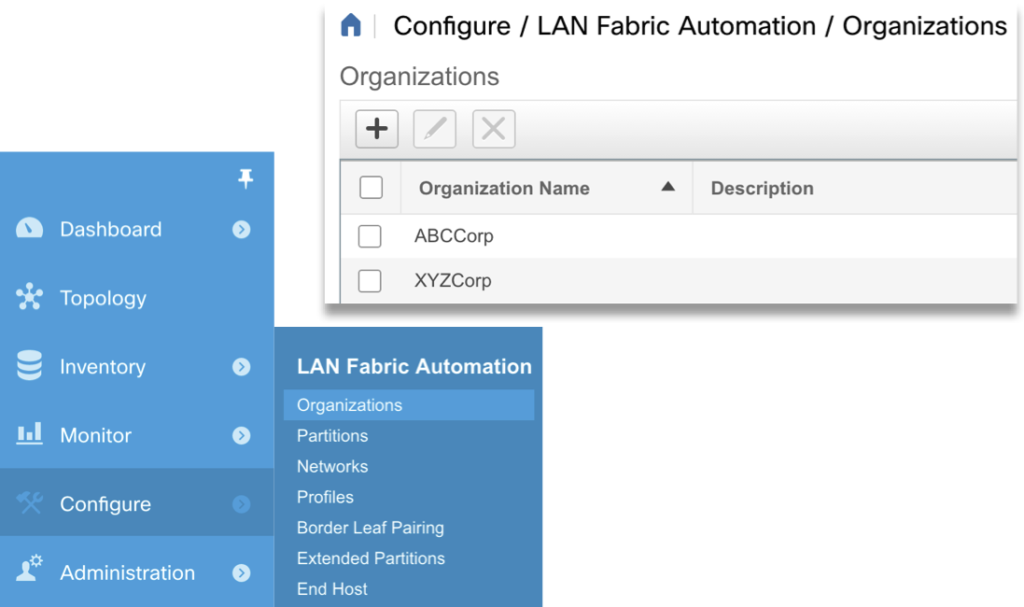

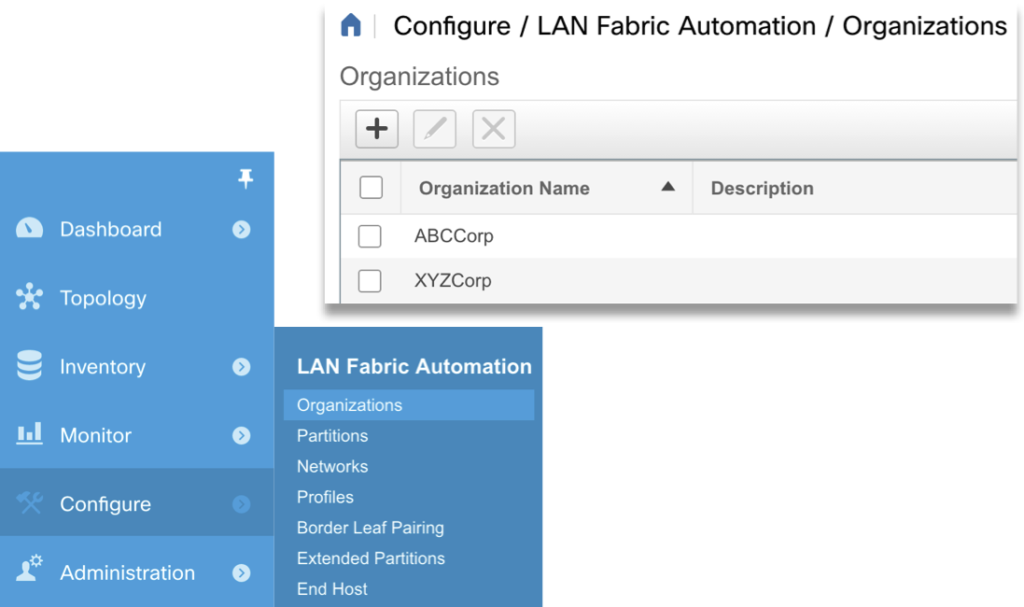

Remember from the previous article that, after we define the Fabric, we need to define the Organization (Tenant), the Partition (VRF), and then the Network. Defining the organization is quite easy: just navigate to the organizations screen, click the plus button, and give it a name. You may only have one tenant in your data center, but if you have more than one you can define them here. (I am using extremely creative and non-trademark-violating names here.) Be sure to pick the correct Fabric name in the drop-down at the top of the screen; often when you don’t see what you are expecting in DCNM, it is because you are not on the correct fabric.

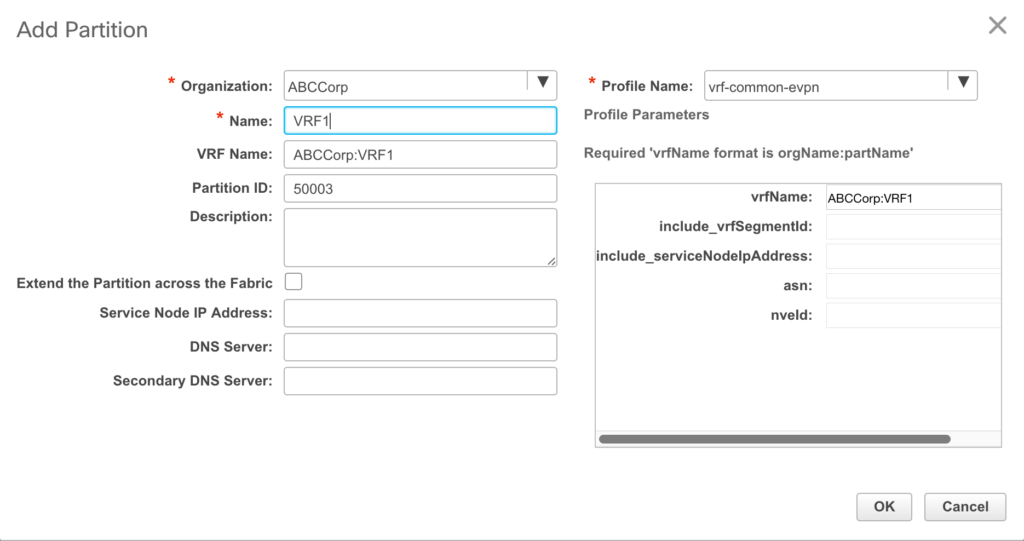

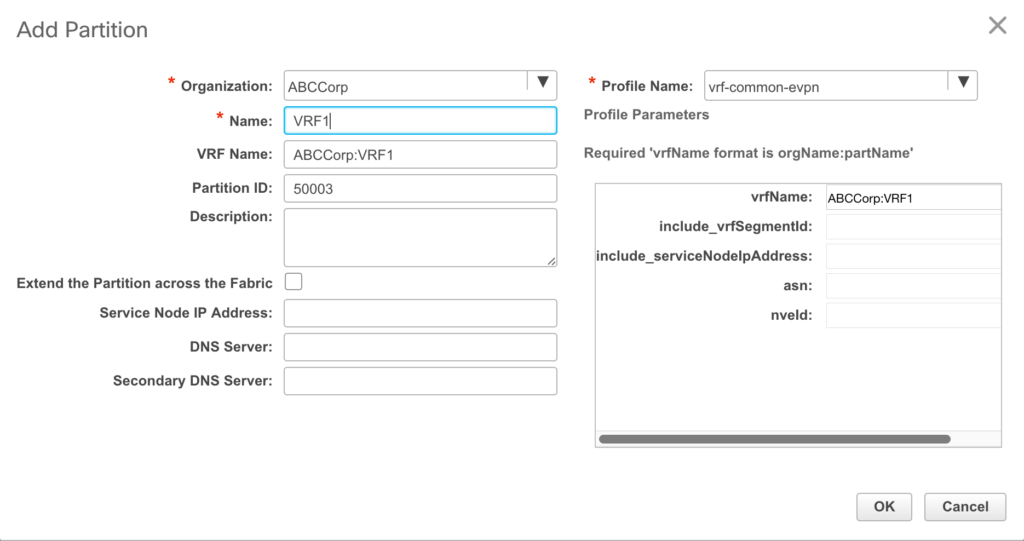

Next, we need to add the partition, which is DCNM’s name for a VRF. Remember, we are talking about multitenancy here. Not only do we have the option to create multiple tenants, but each tenant can have multiple VRFs. Adding a VRF is just about as easy as adding an organization. DCNM does have a number of profiles that can be used to build the VRFs, but for most VXLAN fabrics, the default EVPN profile is fine. You only need to enter the VRF name. The partition ID is already populated for you, and there is no need to change it.

There is something important to note in the above screen shot. The name given to the VRF is prepended with the name of the organization. This is because the switches themselves have no concept of organization. By prepending the org name to the VRF, you can easily reuse VRF names in different organizations without risk of conflict on the switch.

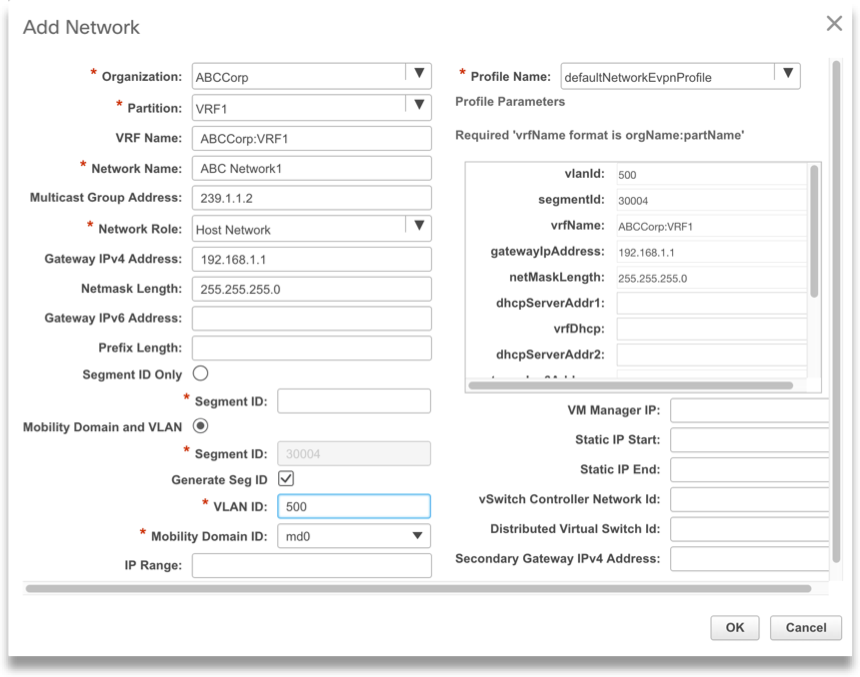

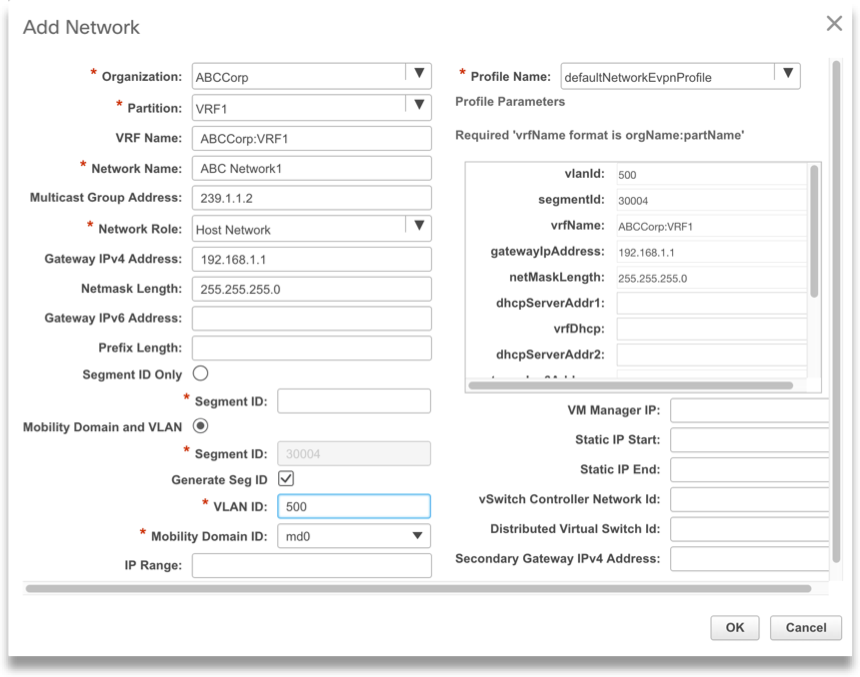

Finally, let’s provision the network. This is where most of the configuration happens. Under the same LAN Fabric Automation menu we saw above, navigate to Networks. As before, we need to pick a profile, but the default is fine for most layer 3 cases.

Once we specify the organization and partition that we already created, we tell DCNM the gateway address. This is the Anycast gateway address that will be configured on any switch that has a host in this VLAN. Remember that in VXLAN/EVPN, each leaf switch acts as a default gateway for the VLANs it serves. We also specify the VLAN ID, of course.

Once this is saved, the profile is in DCNM and ready to go. Unlike with the underlay config, nothing is actually deployed on the switch at this point. The config is just sitting in DCNM, waiting for a workload to become active that requires it. If no workload requires the configuration we specified, it will never make it to a switch. And, if switch-1 requires the config while switch-2 does not, well, switch-2 will never get it. This is the power of auto-configuration. It’s entirely likely that when you are configuring your data center switches by hand, you don’t configure VLANs on switches that don’t require them, but you have to figure that out yourself. With auto-config, we just deploy as needed.
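The deploy-as-needed behavior can be pictured with a toy model. This is only a sketch of the idea, not how DCNM or NX-OS implement it: the `NetworkProfile` and `Leaf` classes and the `on_tagged_frame` method are hypothetical names, and the profile store is a plain dictionary standing in for DCNM.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class NetworkProfile:
    """A network definition as stored centrally (hypothetical structure)."""
    organization: str
    partition: str
    vlan: int
    gateway: str


@dataclass
class Leaf:
    """A leaf switch that starts with no overlay config at all."""
    name: str
    deployed_vlans: set = field(default_factory=set)

    def on_tagged_frame(self, vlan: int, profile_store: dict) -> bool:
        """Pull and apply a profile the first time a VLAN is seen.

        Returns True only when new configuration was deployed.
        """
        if vlan in self.deployed_vlans:
            return False  # already provisioned, nothing to do
        if profile_store.get(vlan) is None:
            return False  # no network defined for this VLAN
        self.deployed_vlans.add(vlan)
        return True


# The store plays the role of DCNM; values mirror the example network.
profile_store = {501: NetworkProfile("ABCCorp", "VRF1", 501, "192.168.1.1/24")}
switch_1 = Leaf("switch-1")
switch_2 = Leaf("switch-2")

# Only switch-1 sees a host in VLAN 501.
switch_1.on_tagged_frame(501, profile_store)

print(switch_1.deployed_vlans)
print(switch_2.deployed_vlans)
```

In this model switch-2 never touches the store, which is the point: the definition exists centrally, but a switch only carries it once a local workload asks for it.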

Let’s take a step back and review what we have done:

- We have told DCNM to enable 802.1q triggering for switches that are configured with auto-provisioning.

- We have created an organization and partition for our new network.

- We have told DCNM what configuration is required to support that network.

Auto-Config in Action

Now that we’ve set DCNM up, let’s look at the switches. First of all, I verify that there is no VRF or SVI configuration for this partition and network:

jemclaug-hh14-n5672-1# sh vrf all

VRF-Name VRF-ID State Reason

default 1 Up --

management 2 Up --

jemclaug-hh14-n5672-1# sh ip int brief vrf all | i 192.168

jemclaug-hh14-n5672-1#

We can see here that there is no VRF other than the default and management VRFs, and there are no SVIs with the 192.168.x.x prefix. Now I start a ping from my VM1, which you will recall is connected to this switch:

jeffmc@ABC-VM1:~$ ping 192.168.1.1

PING 192.168.1.1 (192.168.1.1) 56(84) bytes of data.

64 bytes from 192.168.1.1: icmp_seq=9 ttl=255 time=0.794 ms

64 bytes from 192.168.1.1: icmp_seq=10 ttl=255 time=0.741 ms

64 bytes from 192.168.1.1: icmp_seq=11 ttl=255 time=0.683 ms

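Notice from the output that the first ping I get back is sequence #9. The earlier pings went unanswered while the switch detected the tagged traffic, pulled the profile from DCNM, and applied it. Back on the switch: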

jemclaug-hh14-n5672-1# sh vrf all

VRF-Name VRF-ID State Reason

ABCCorp:VRF1 4 Up --

default 1 Up --

management 2 Up --

jemclaug-hh14-n5672-1# sh ip int brief vrf all | i 192.168

Vlan501 192.168.1.1 protocol-up/link-up/admin-up

jemclaug-hh14-n5672-1#

Now we have a VRF and an SVI! As I stated before, the switch itself has no concept of organization, which is really just a tag DCNM applies to the front of the VRF. If I had created a VRF1 in the XYZCorp organization, the switch would not see it as a conflict because it would be XYZCorp:VRF1 instead of ABCCorp:VRF1.
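The naming scheme is simple enough to express in a couple of lines. This is a sketch based only on the names visible in the switch output above. The helper `switch_vrf_name` is hypothetical, and the colon separator is inferred from `ABCCorp:VRF1`.

```python
def switch_vrf_name(organization: str, partition: str) -> str:
    """Build the VRF name as it appears on the switch: org, colon, partition."""
    return f"{organization}:{partition}"


# Two tenants reuse the partition name VRF1 without colliding on the switch.
names = {
    switch_vrf_name("ABCCorp", "VRF1"),
    switch_vrf_name("XYZCorp", "VRF1"),
}

print(sorted(names))
```

Because the organization is folded into the name, the switch sees two distinct VRFs even though both tenants chose the same partition name.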

If we want to look at the SVI configuration, we need to use the expand-port-profile option. The profile pulled down from DCNM is not shown in the running config:

jemclaug-hh14-n5672-1# sh run int vlan 501 expand-port-profile

interface Vlan501

no shutdown

vrf member ABCCorp:VRF1

ip address 192.168.1.1/24 tag 12345

fabric forwarding mode anycast-gateway
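One way to think about the profile is as a template filled in with the values entered on the Networks screen. The sketch below reproduces the SVI shown above from those values. It is an illustration only: `render_svi` is a hypothetical function, this is not DCNM’s actual profile syntax, and the tag default is simply copied from the output above.

```python
def render_svi(vlan: int, vrf: str, gateway: str, tag: int = 12345) -> str:
    """Render the SVI lines seen in the expanded running config.

    vlan    -- VLAN ID entered for the network
    vrf     -- VRF name as the switch sees it (organization:partition)
    gateway -- anycast gateway address with prefix length
    tag     -- route tag; the default is copied from the example output
    """
    lines = [
        f"interface Vlan{vlan}",
        "  no shutdown",
        f"  vrf member {vrf}",
        f"  ip address {gateway} tag {tag}",
        "  fabric forwarding mode anycast-gateway",
    ]
    return "\n".join(lines)


print(render_svi(501, "ABCCorp:VRF1", "192.168.1.1/24"))
```

Every leaf that serves VLAN 501 would render the same gateway address, which is what makes the gateway anycast.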

VMTracker

Let’s have a quick look at VMTracker. As I mentioned in this post and the previous one, dot1q triggering requires the host to actually send data before it auto-configures the switch. The nice thing about VMTracker is that it will configure the switch when a VM becomes active, regardless of whether it is actually sending data. The switch itself is configured with the address of and credentials for your vCenter server, so it becomes aware when a workload is active.

Note: Earlier I said you have to configure the vCenter address and credentials in DCNM. Don’t be confused! DCNM is not talking to vCenter; the Nexus switch is. You only enter them in DCNM if you are using Power-on Auto-Provisioning. In other words, DCNM will not establish a connection to vCenter, but it will push the address and credentials down to the switch, and the switch establishes the connection.

We can see the VMTracker configuration on the second Nexus 5K:

jemclaug-hh14-n5672-2(config-vmt-conn)# sh run | sec vmtracker

feature vmtracker

encapsulation dynamic vmtracker

vmtracker fabric auto-config

vmtracker connection vc

remote ip address 172.26.244.120

username administrator@vsphere.local password 5 Qxz!12345

connect

The feature is enabled, and the “encapsulation dynamic vmtracker” command is applied to the relevant interfaces. (You can see the command here, but because I used the “| sec” option to view the config, you cannot see which interface it is applied under.) We can see that I also supplied the vCenter IP address and login credentials. (The password is sort-of encrypted.) Notice also the connect statement. The Nexus will not connect to the vCenter server until this is applied. Now we can look at the vCenter connection:

jemclaug-hh14-n5672-2(config-vmt-conn)# sh vmtracker status

Connection Host/IP status

-----------------------------------------------------------------------------

vc 172.26.244.120 Connected

We have connected successfully!
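If you are checking several leaves, it may be easier to parse this output than to read it. The following is a minimal sketch that assumes the three-column layout shown above and a one-word status. `vmtracker_connections` is a hypothetical helper, and other status strings or layouts would need their own handling.

```python
def vmtracker_connections(status_output: str) -> dict:
    """Map connection name -> (host, status) from captured status output.

    Assumes data rows have exactly three whitespace-separated columns,
    as in the sample; the header and separator rows are skipped.
    """
    connections = {}
    for line in status_output.splitlines():
        fields = line.split()
        if len(fields) != 3 or fields[0] == "Connection":
            continue  # blank line, dashed separator, or header row
        name, host, status = fields
        connections[name] = (host, status)
    return connections


# Sample text copied from the status output shown above.
sample = """\
Connection Host/IP status
-----------------------------------------------------------------------------
vc 172.26.244.120 Connected
"""

connections = vmtracker_connections(sample)
print(connections)
print(all(status == "Connected" for _, status in connections.values()))
```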

As with dot1q triggering, there is no VRF or SVI configured yet for our host:

jemclaug-hh14-n5672-2# sh vrf all

VRF-Name VRF-ID State Reason

default 1 Up --

management 2 Up --

jemclaug-hh14-n5672-2# sh ip int brief vrf all | i 192.168

We now go to vSphere (or vCenter) and power up the VM connected to this switch:

Once we bring up the VM, we can see the switch has been notified, and the VRF has been automatically provisioned, along with the SVI.

jemclaug-hh14-n5672-2# sh vmtracker event-history | i VM2

761412 Dec 21 2016 13:43:02:572793 ABC-VM2 on 172.26.244.177 in DC4 is powered on

jemclaug-hh14-n5672-2# sh vrf all | i ABC

ABCCorp:VRF1 4 Up --

jemclaug-hh14-n5672-2# sh ip int brief vrf all | i 192

Vlan501 192.168.1.1 protocol-up/link-up/admin-up

jemclaug-hh14-n5672-2#

Thus, we had the same effect as with dot1q triggering, but we didn’t need to wait for traffic!
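The event-history line above carries the pieces the switch needs to act on. A short sketch of pulling them out follows, assuming the line format stays as shown. The group names `vm`, `host`, and `datacenter` are my reading of the fields, not labels from Cisco documentation, and only the “powered on” wording seen above is matched.

```python
import re

# Matches "<vm> on <host> in <datacenter> is powered on" anywhere in the line.
EVENT_PATTERN = re.compile(
    r"(?P<vm>\S+) on (?P<host>\S+) in (?P<datacenter>\S+) is powered on"
)


def parse_power_on_event(line: str):
    """Return a dict with vm, host and datacenter, or None if no match."""
    match = EVENT_PATTERN.search(line)
    return match.groupdict() if match else None


# Sample line copied from the event history shown above.
line = (
    "761412 Dec 21 2016 13:43:02:572793 "
    "ABC-VM2 on 172.26.244.177 in DC4 is powered on"
)

event = parse_power_on_event(line)
print(event)
```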

I hope these articles have been helpful. Much of the documentation on DCNM right now is not in the form of a walk-through, and while I don’t offer as much detail, these articles should get you started. Remember, with DCNM, you get advanced features free for 30 days, so go ahead and download it and play with it.